Create Cluster

USB storage devices are blocked by default. If accessing USB devices is needed, please set the tuning option cubesys.probeusb to true using CLI > tuning > configure after logging in as admin.

Prerequisite

The user bigstack-k8s and the project bigstack-k8s are used throughout this demonstration.

- A project for Kubernetes has been created, and the user's Kubernetes permissions have been set to Administrator. See Create project for Kubernetes.

- The Amphora image has been imported.

- Download Amphora image: amphora-x64-haproxy_yoga

- From a computer with management access to your cluster VIP, use scp to upload the downloaded image to the Glance image directory /mnt/cephfs/glance:

scp amphora-x64-haproxy_yoga.qcow2 root@<cluster-vip>:/mnt/cephfs/glance/

- Log in to the admin shell.

- Run the following command to import the load balancer image:

image import_lb

cc1> image import_lb

1: usb

2: local

Enter index: 2

1: amphora-x64-haproxy-yoga.qcow2

Enter index: 1

Importing...

[16:25:04] Converting image to RAW format ...

(100.00/100%)

[16:25:11] Creating image amphora-x64-haproxy ...

[=============================>] 100%

aa93010b-eb36-4357-b1cf-fea46958908f

[16:26:07] Finished creating image amphora-x64-haproxy

Importing complete. It is safe to remove the local image file.
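The import output above prints the UUID of the newly created image on a line of its own. If the session output is saved to a file, that ID can be pulled out with a short shell function. This is only a minimal sketch: the function name `extract_image_id` and the log file name are hypothetical, and it assumes the UUID appears alone on its line with no trailing whitespace, as in the transcript above.

```shell
#!/bin/sh
# Hypothetical helper (not part of the CubeCOS CLI): print the last
# UUID-only line found in a saved import transcript.
extract_image_id() {
    grep -E '^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$' "$1" | tail -n 1
}

# Usage (import.log is an assumed file name):
#   extract_image_id import.log
```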

- A Linux image has been imported and is ready for use in deployments.

For the purposes of this demonstration, we will use the ubuntu2404.qcow2 image as the base system image.

- Visit the CubeCOS Downloads page to obtain a supported image (e.g., ubuntu2404.qcow2).

- From a workstation or jump host with network access to the cluster VIP, use scp to transfer the image to the Glance image store /mnt/cephfs/glance:

scp ubuntu2404.qcow2 root@<cluster-vip>:/mnt/cephfs/glance/

- Log in to the admin shell.

- Run the following command to import the Linux image:

image import

cc1> image import

1: usb

2: local

Enter index: 2

1: amphora-x64-haproxy-yoga.qcow2

2: ubuntu2404.qcow2

Enter index: 2

Specify image name: ubuntu_2404

Select domain:

1: default

Enter index: 1

Select tenant:

1: admin

2: bigstack-k8s

Enter index: 1

Bootable image or volume:

1: glance-images

2: cinder-volumes

Enter index: 1

Visibility:

1: public

2: private

Enter index: 1

Importing...

[16:29:57] Converting image to RAW format ...

(100.00/100%)

[16:30:09] Creating image ubuntu_2404 ...

[=============================>] 100%

ebfa199b-12d2-4a7c-8a8e-aef452f71750

[16:31:39] Finished creating image ubuntu_2404

Importing ubuntu_2404 complete. It is safe to remove the local image file.
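Both image uploads in this section follow the same scp pattern, so they can be wrapped in one helper that also checks the copy arrived intact. The sketch below is an illustration under stated assumptions: the function name `upload_image` is hypothetical, root SSH access to the cluster VIP is taken from the scp commands above, and `sha256sum` is assumed to be available on both the workstation and the cluster node.

```shell
#!/bin/sh
# Hypothetical helper: copy an image to the Glance directory on the cluster
# and compare SHA-256 checksums of the local and remote copies.
# Assumes sha256sum exists on both sides and root SSH access to the VIP.
upload_image() {
    file=$1                        # local image file, e.g. ubuntu2404.qcow2
    vip=$2                         # cluster VIP (<cluster-vip> in the text)
    dest=${3:-/mnt/cephfs/glance}  # Glance image directory from this guide

    [ -f "$file" ] || { echo "missing file: $file" >&2; return 1; }

    scp "$file" "root@$vip:$dest/" || return 1

    local_sum=$(sha256sum "$file" | cut -d' ' -f1)
    remote_sum=$(ssh "root@$vip" sha256sum "$dest/$(basename "$file")" | cut -d' ' -f1)

    if [ "$local_sum" = "$remote_sum" ]; then
        echo "checksum OK: $(basename "$file")"
    else
        echo "checksum mismatch: $(basename "$file")" >&2
        return 1
    fi
}

# Usage:
#   upload_image ubuntu2404.qcow2 <cluster-vip>
```

A mismatch usually points to an interrupted transfer, in which case repeating the upload before running the import avoids importing a truncated image.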

Driver setting and cloud credential creation

-

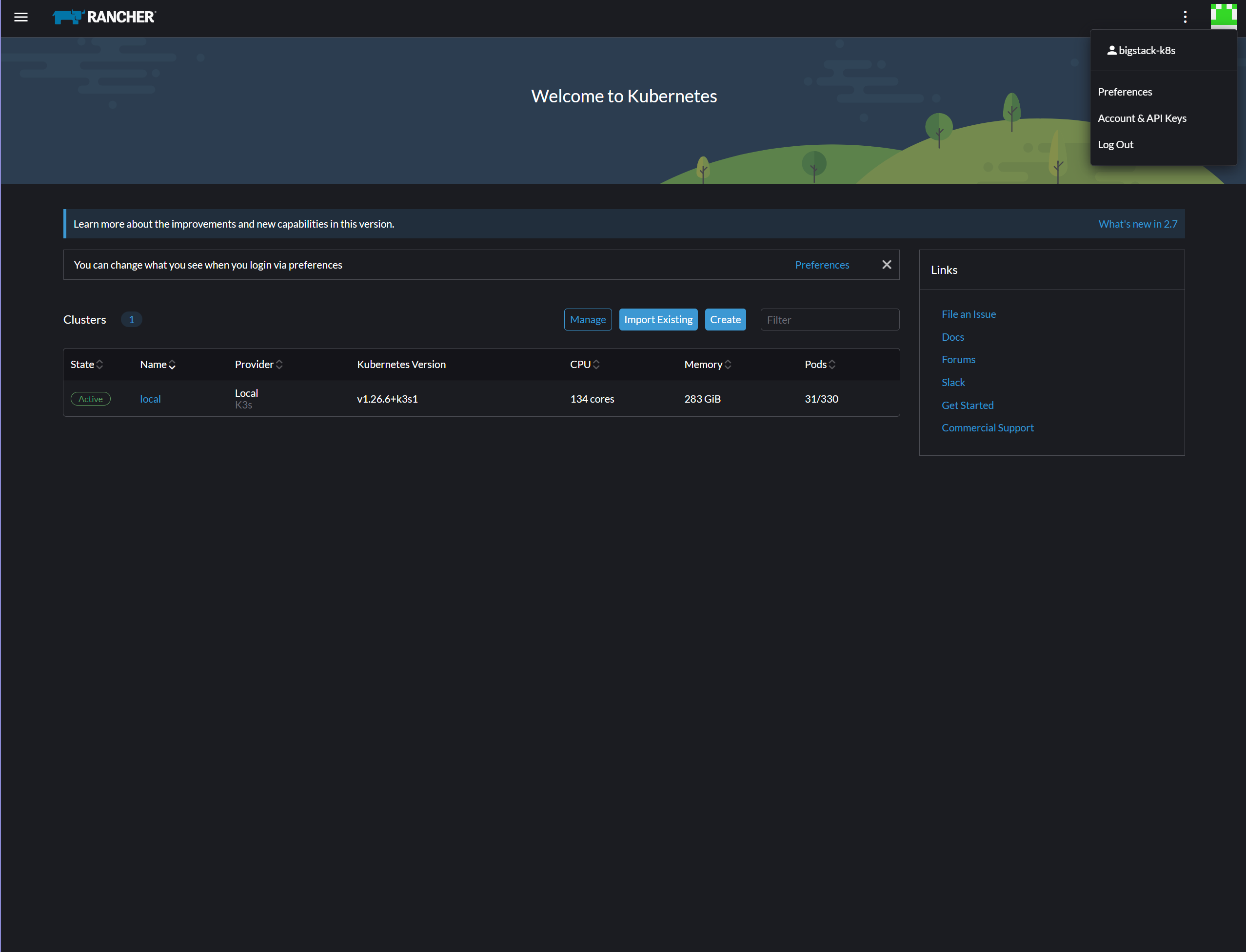

Login Rancher with keycloak user

bigstack-k8s.

-

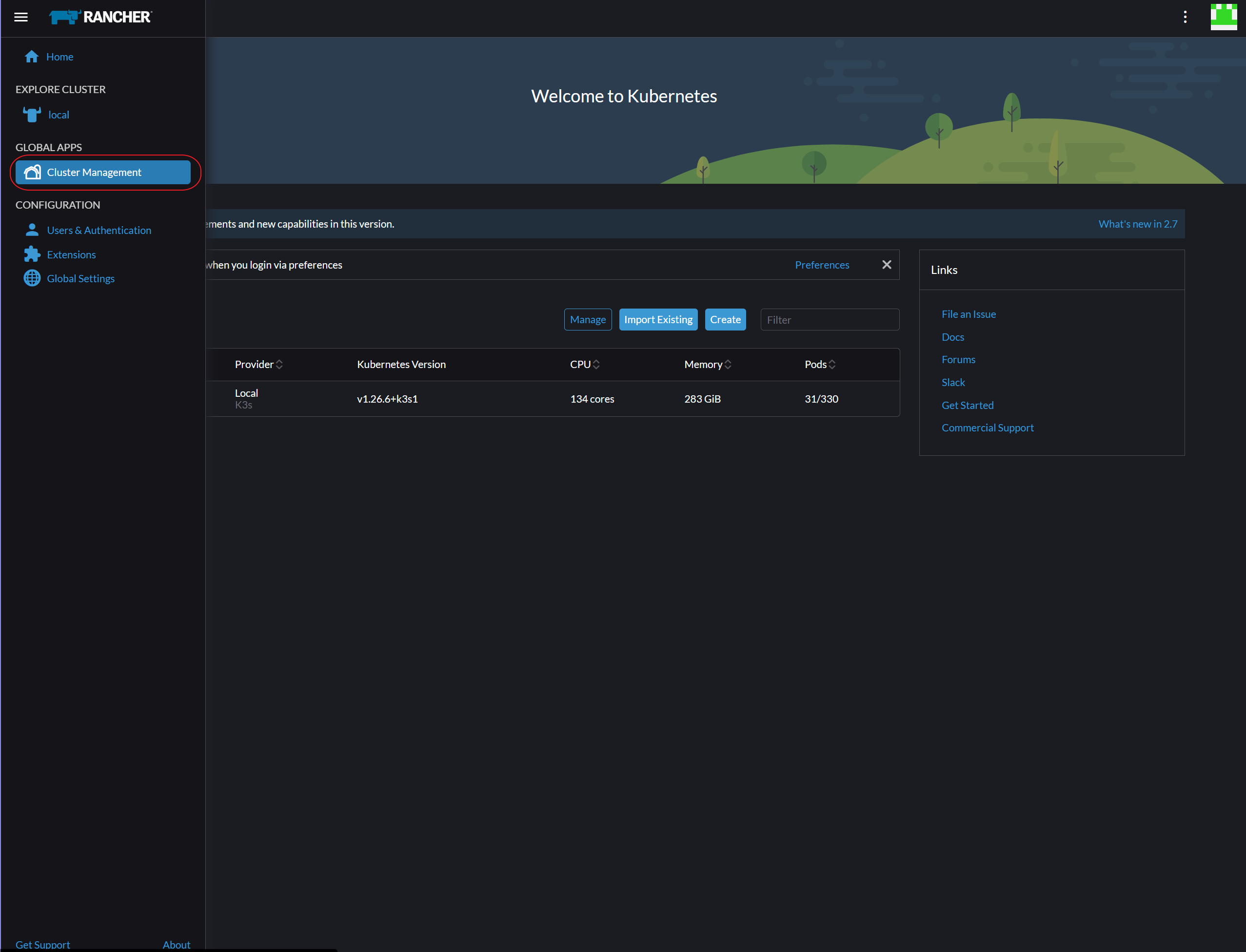

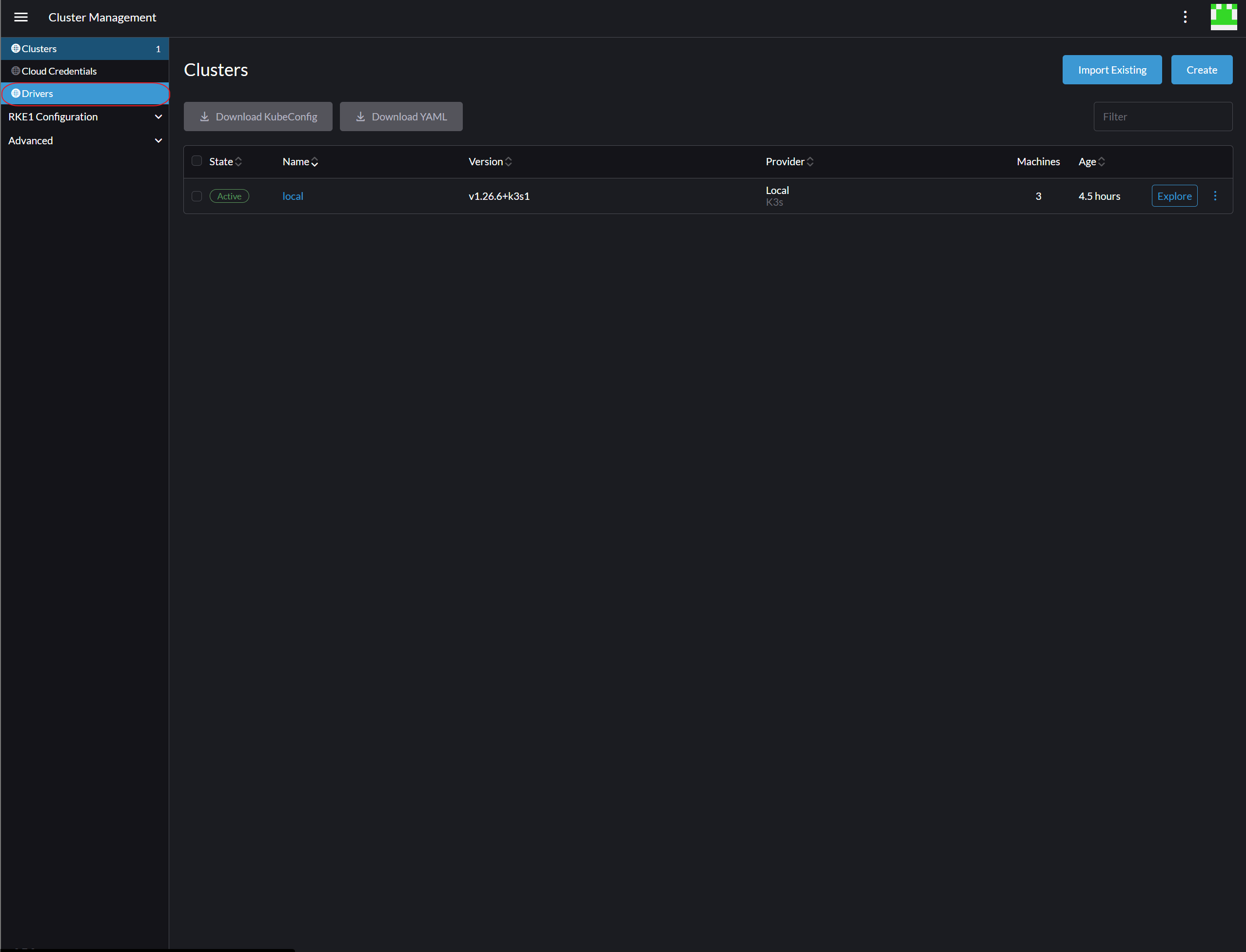

Navigate to

Cluster Management.

-

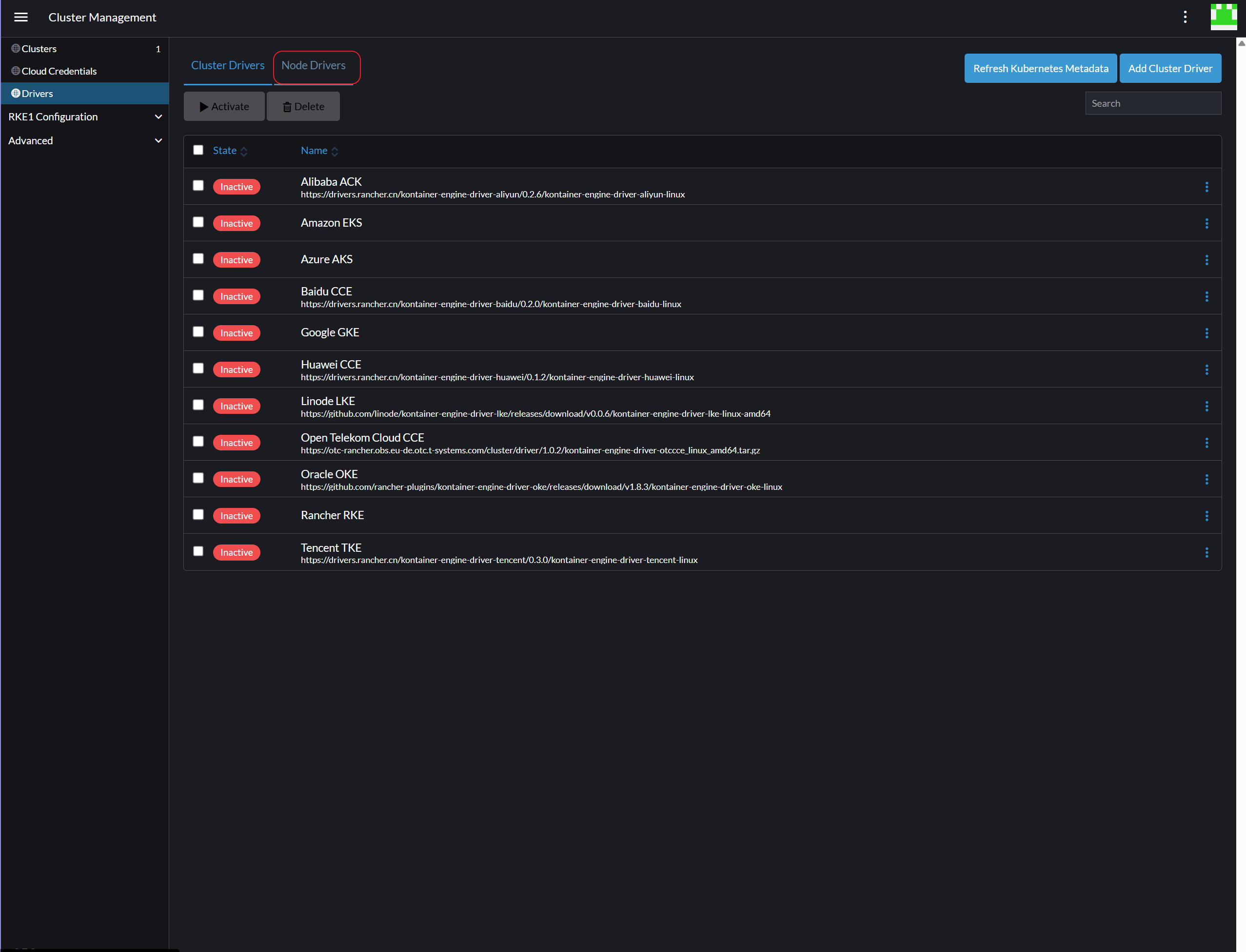

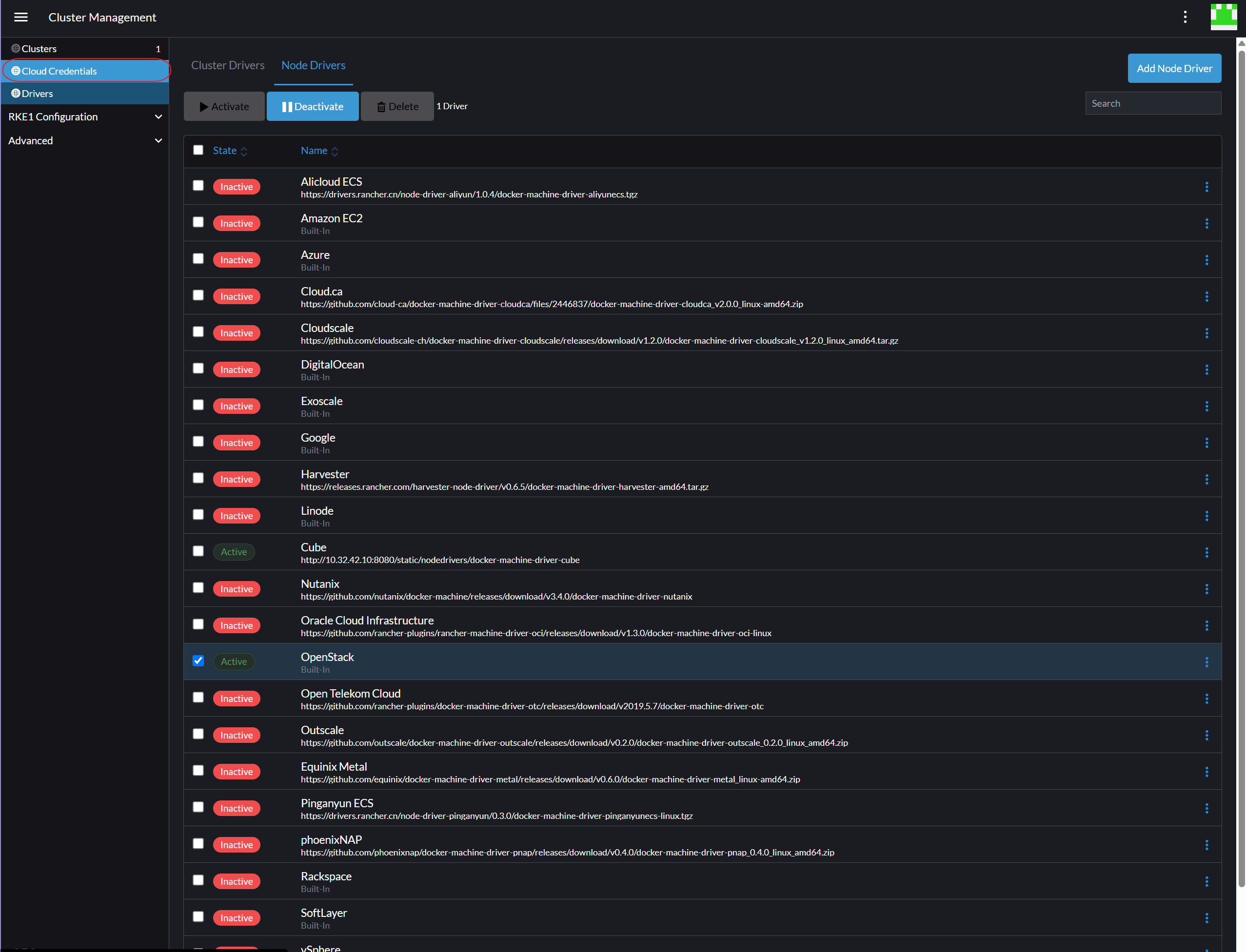

Navigate to

Drivers.

-

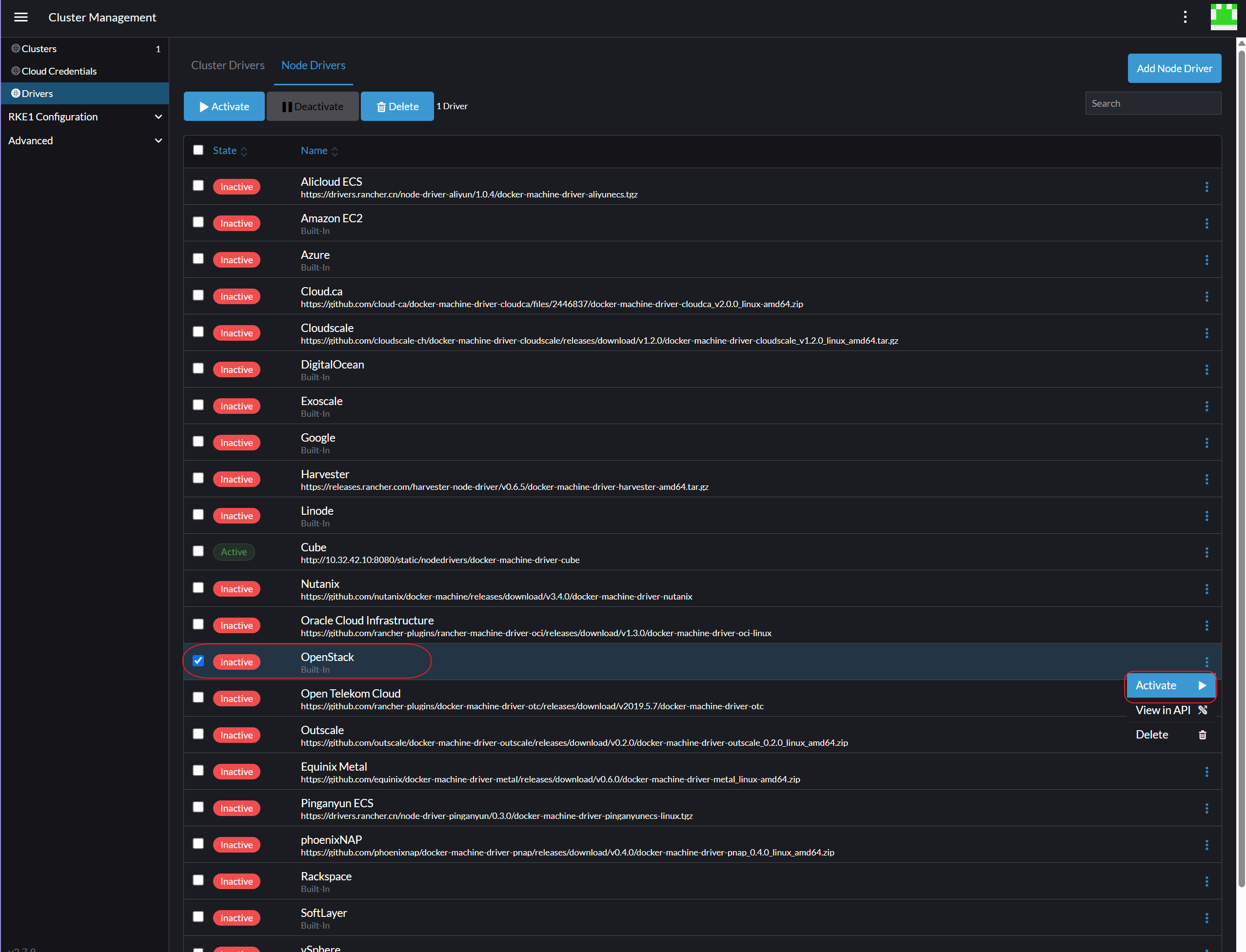

Switch to

Node Driverstab.

- Select OpenStack and choose Activate from its action options.

-

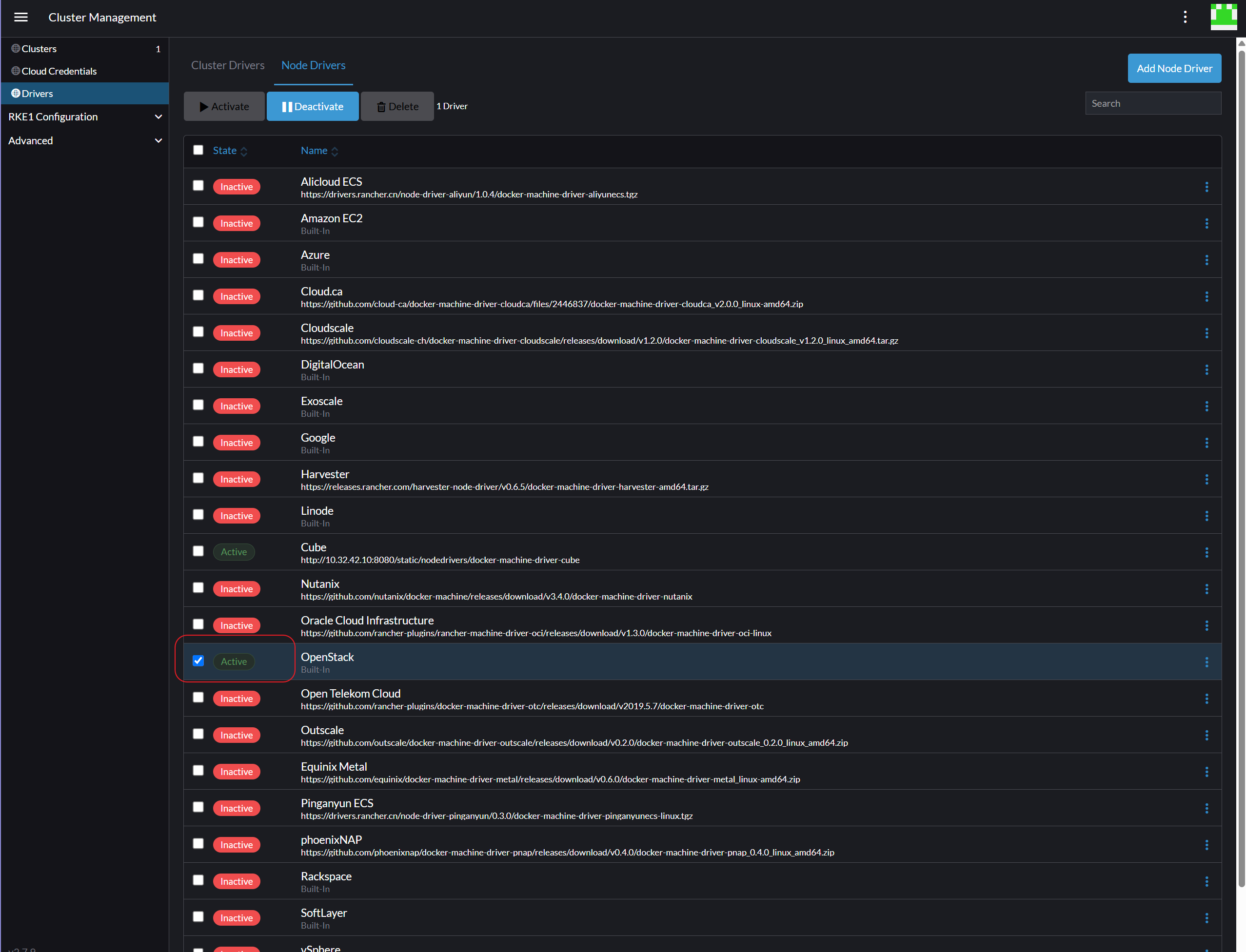

Verify by its

StatebeingActive.

-

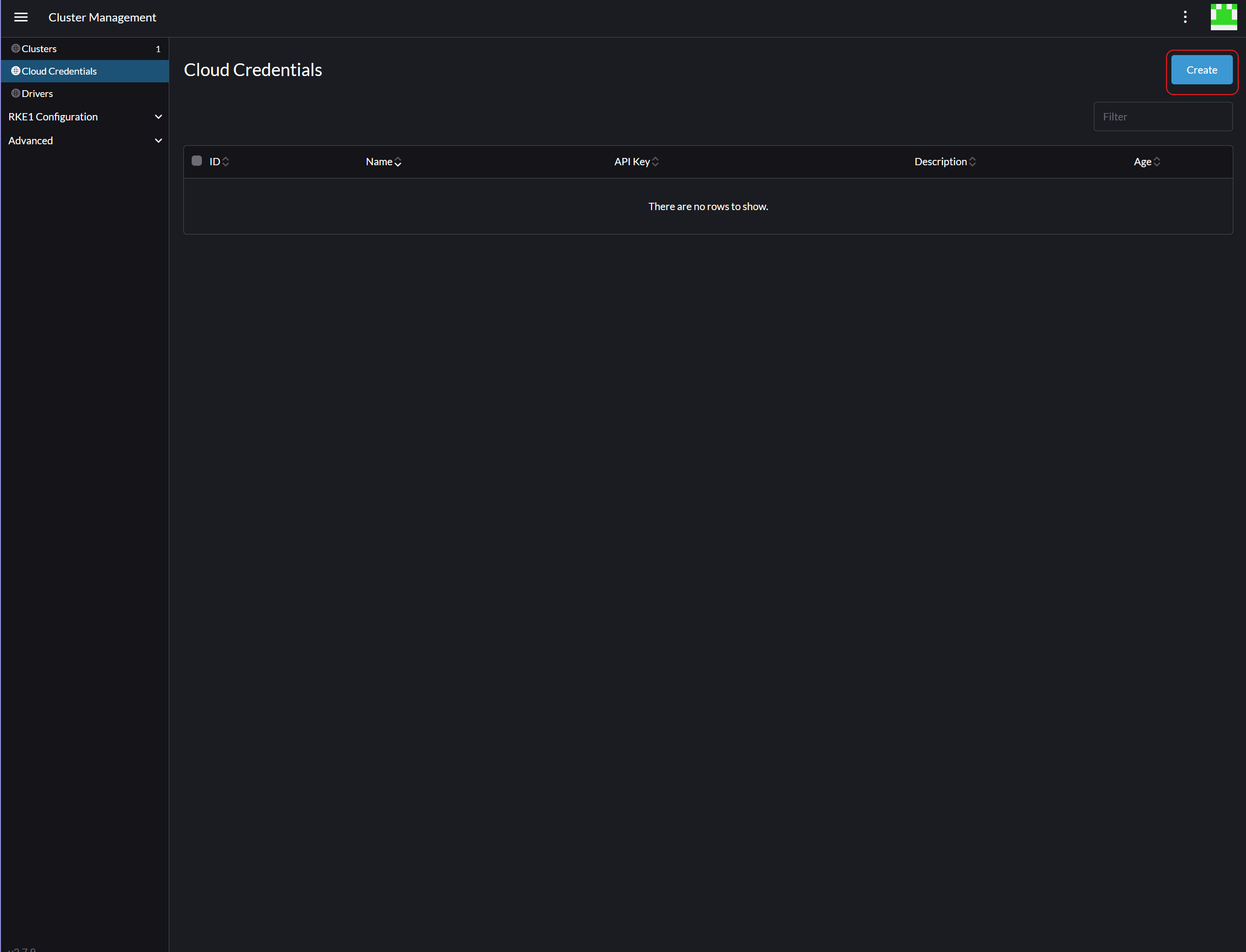

Navigate to

Cloud Credentials.

- In the top-right corner, click Create.

-

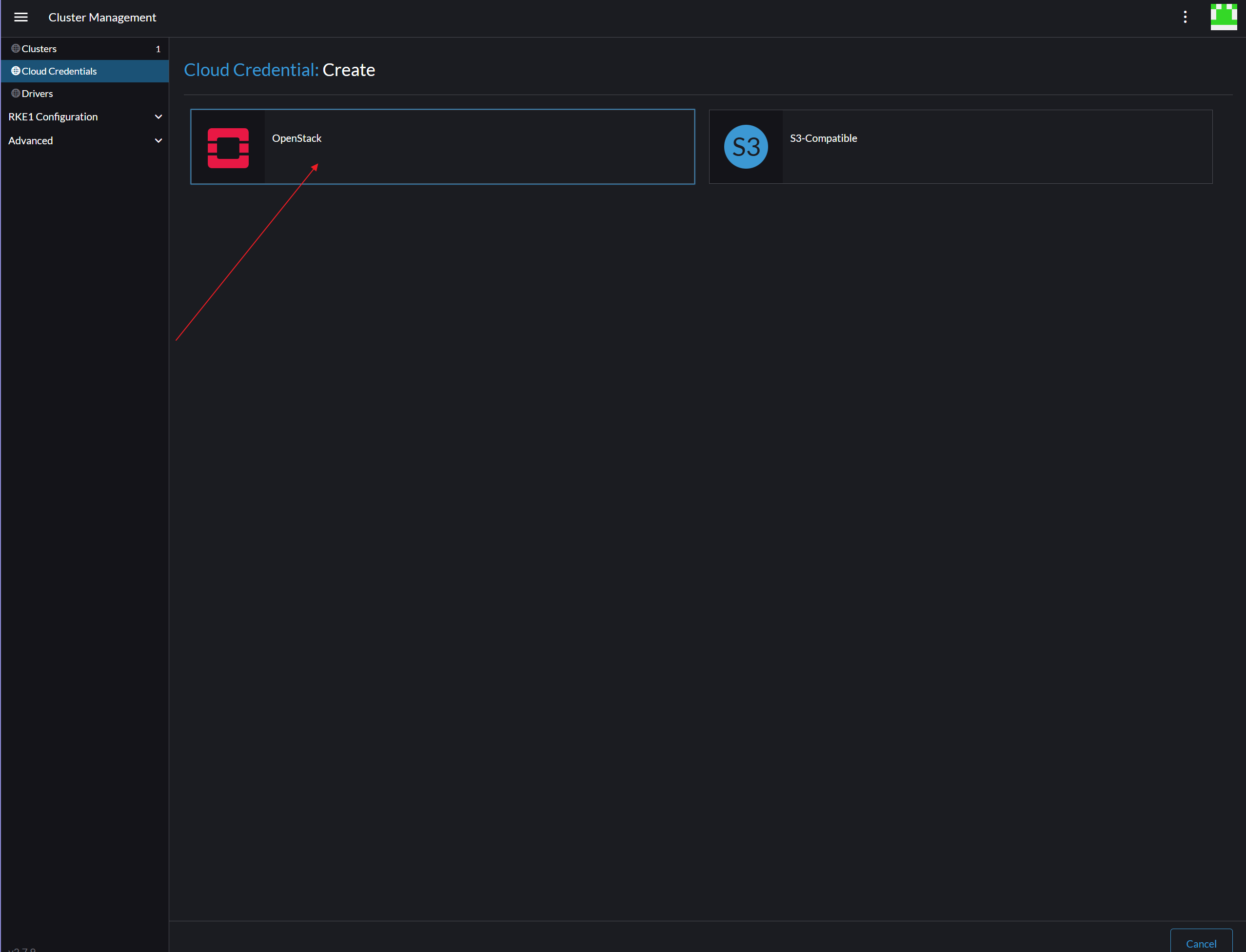

Choose

OpenStackas Cloud Credential template

-

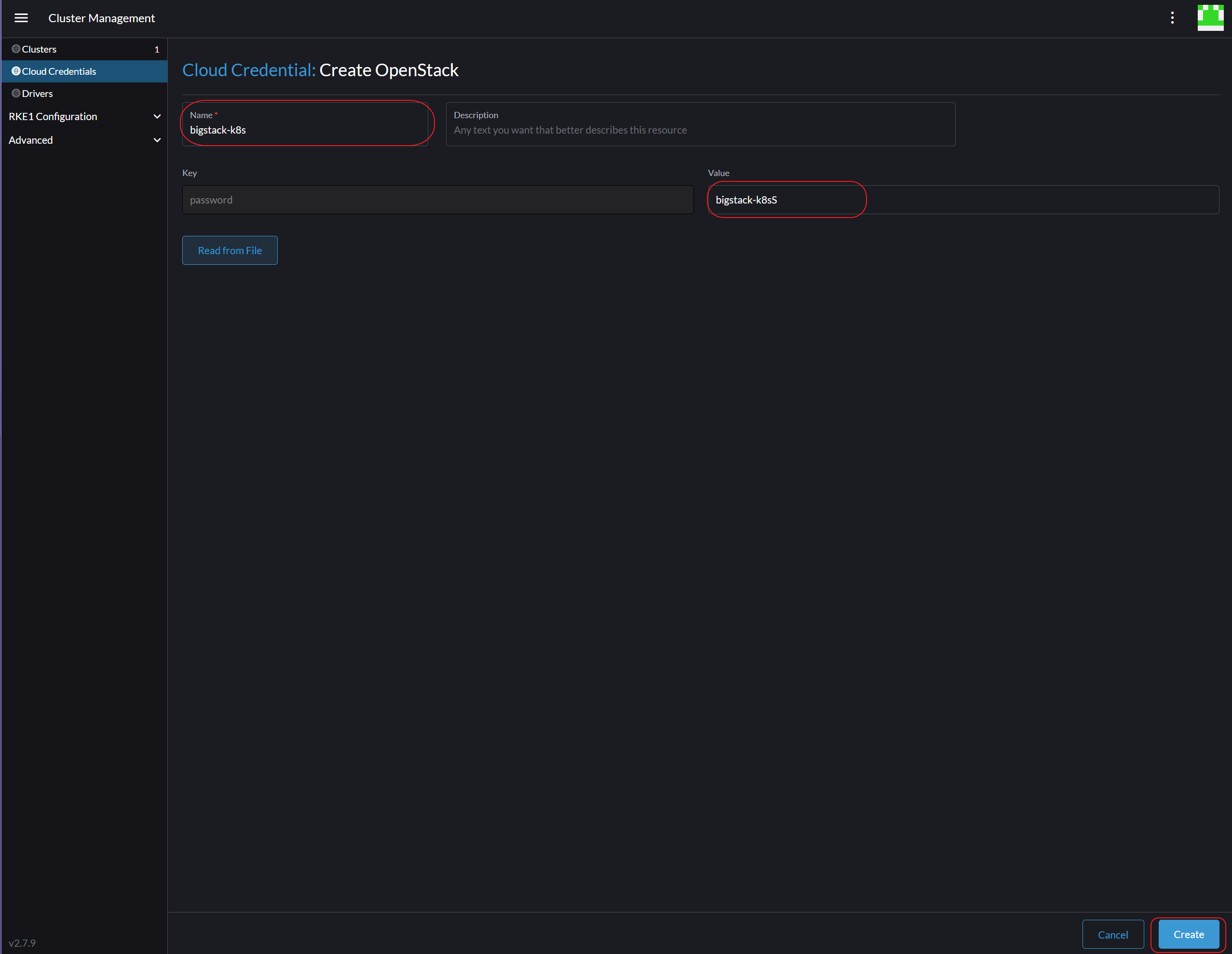

Fill in

user nameasCloud Credential Name, along withuser passwordasvalue of key - password, clickCreateto proceed.

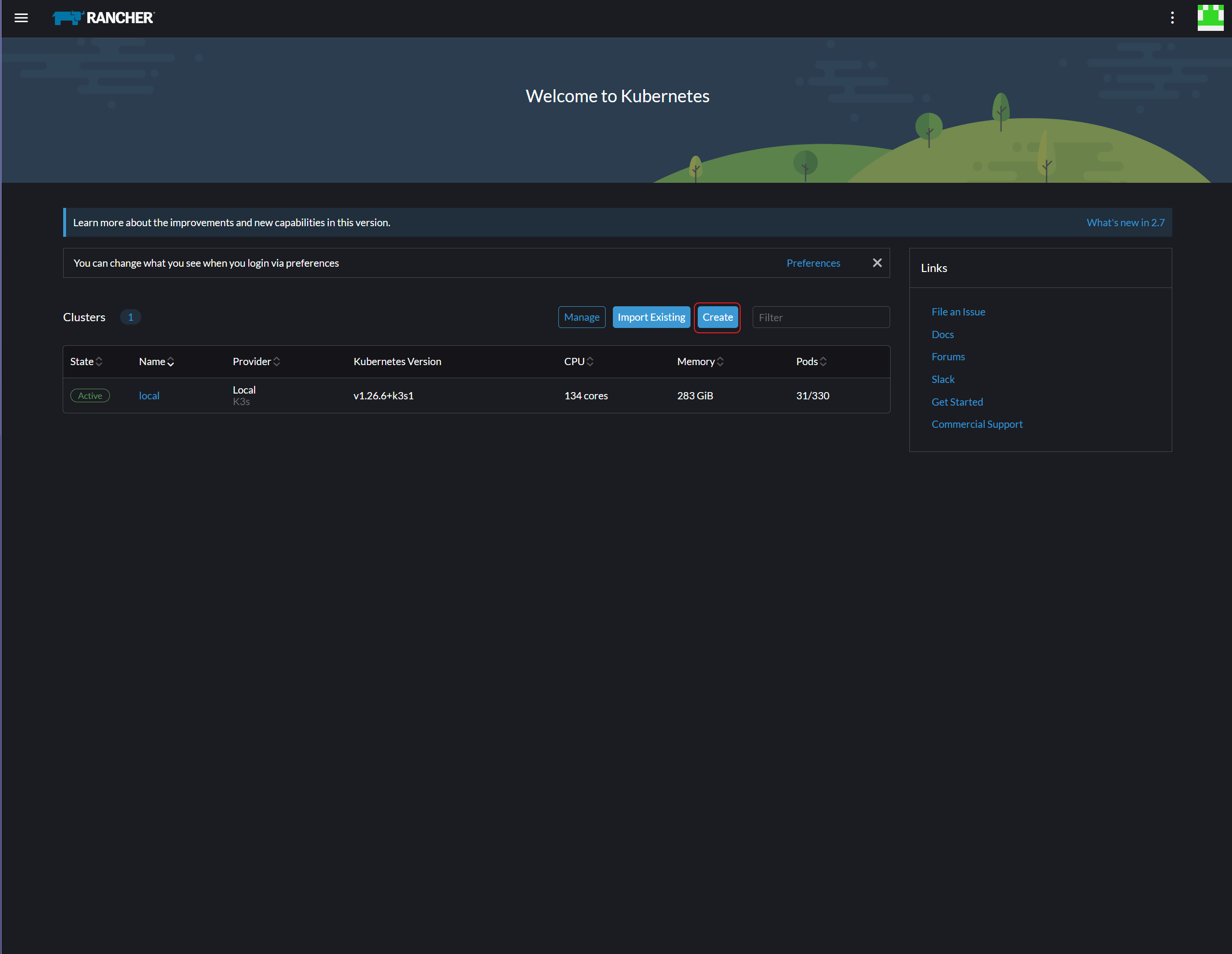

Cluster creation

-

Go back to home page of Rancher and click

Create

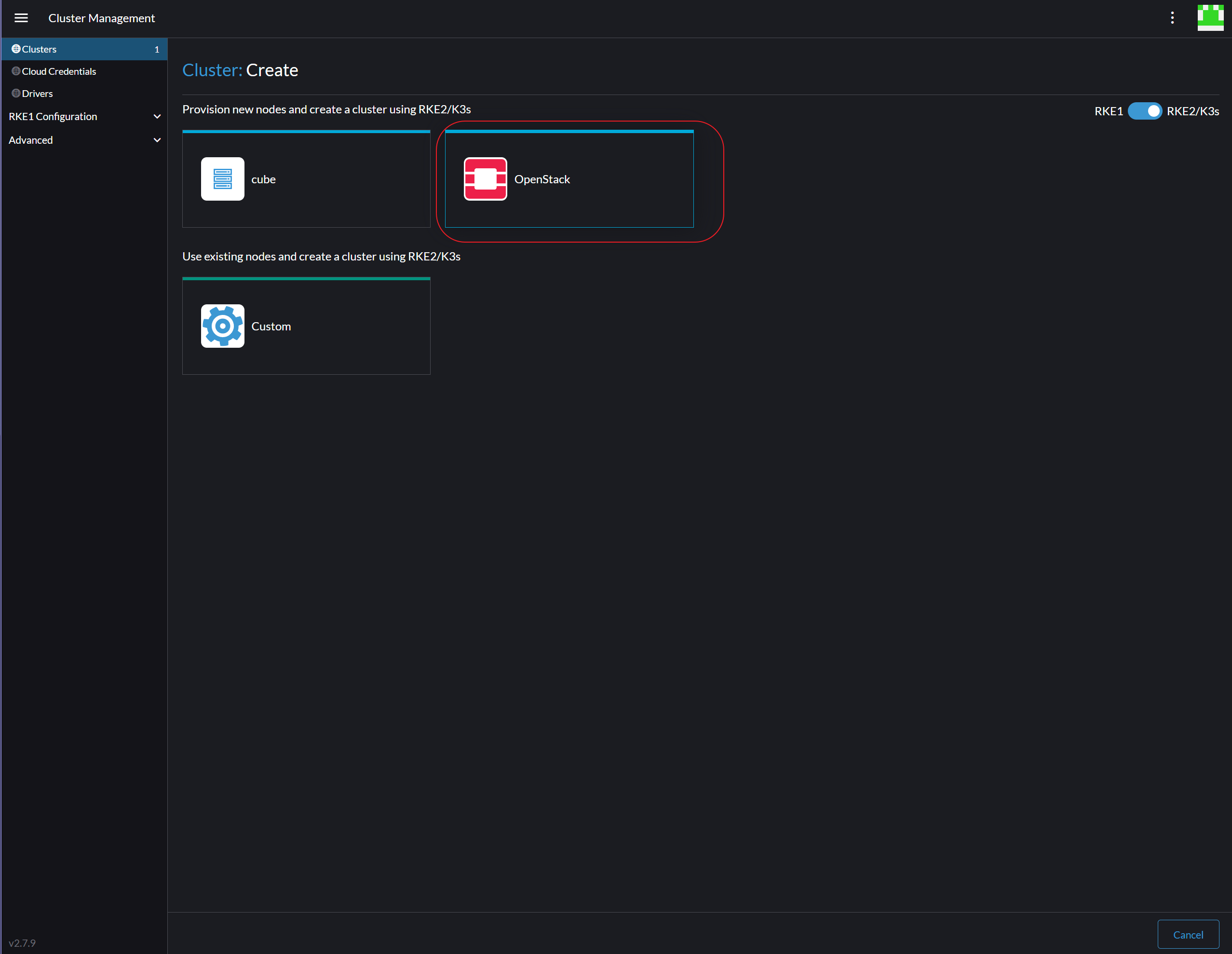

-

Click

OpenStack

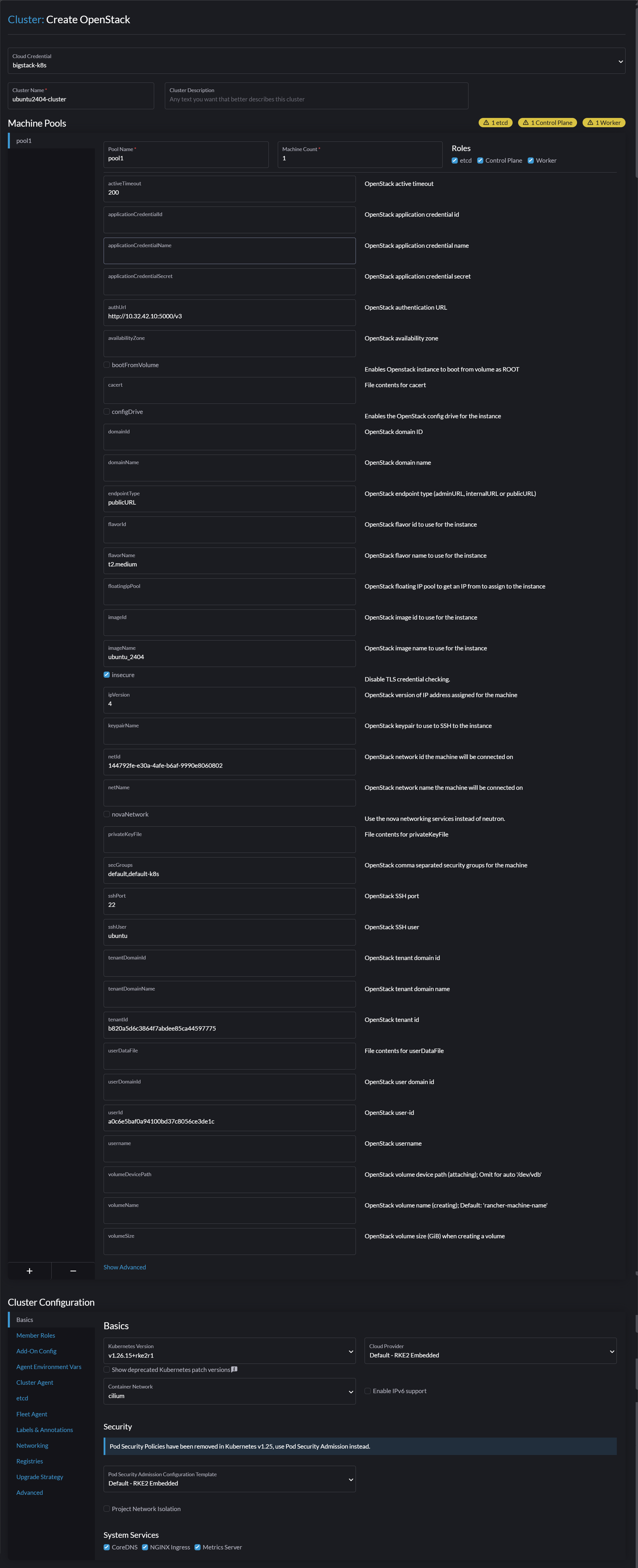

- The following cluster information is required to create the cluster:

- Cluster Name (any)

- OpenStack active timeout (200)

- OpenStack authentication URL (http://<CUBECOS-VIP>:5000/v3)

- OpenStack endpointType (publicURL)

- OpenStack flavor name to use for the instance (recommended t2.medium or above)

- OpenStack image name (ubuntu_2404)

- Disable TLS credential checking

- OpenStack version of IP address assigned for the machine (4)

- OpenStack network id the machine will be connected on (private-k8s from project bigstack-k8s)

- OpenStack comma separated security groups for the machine (default, default-k8s)

- OpenStack SSH port (22)

- OpenStack SSH user (ubuntu)

- OpenStack tenant id (ID for project bigstack-k8s)

- OpenStack user-id (ID for user bigstack-k8s)

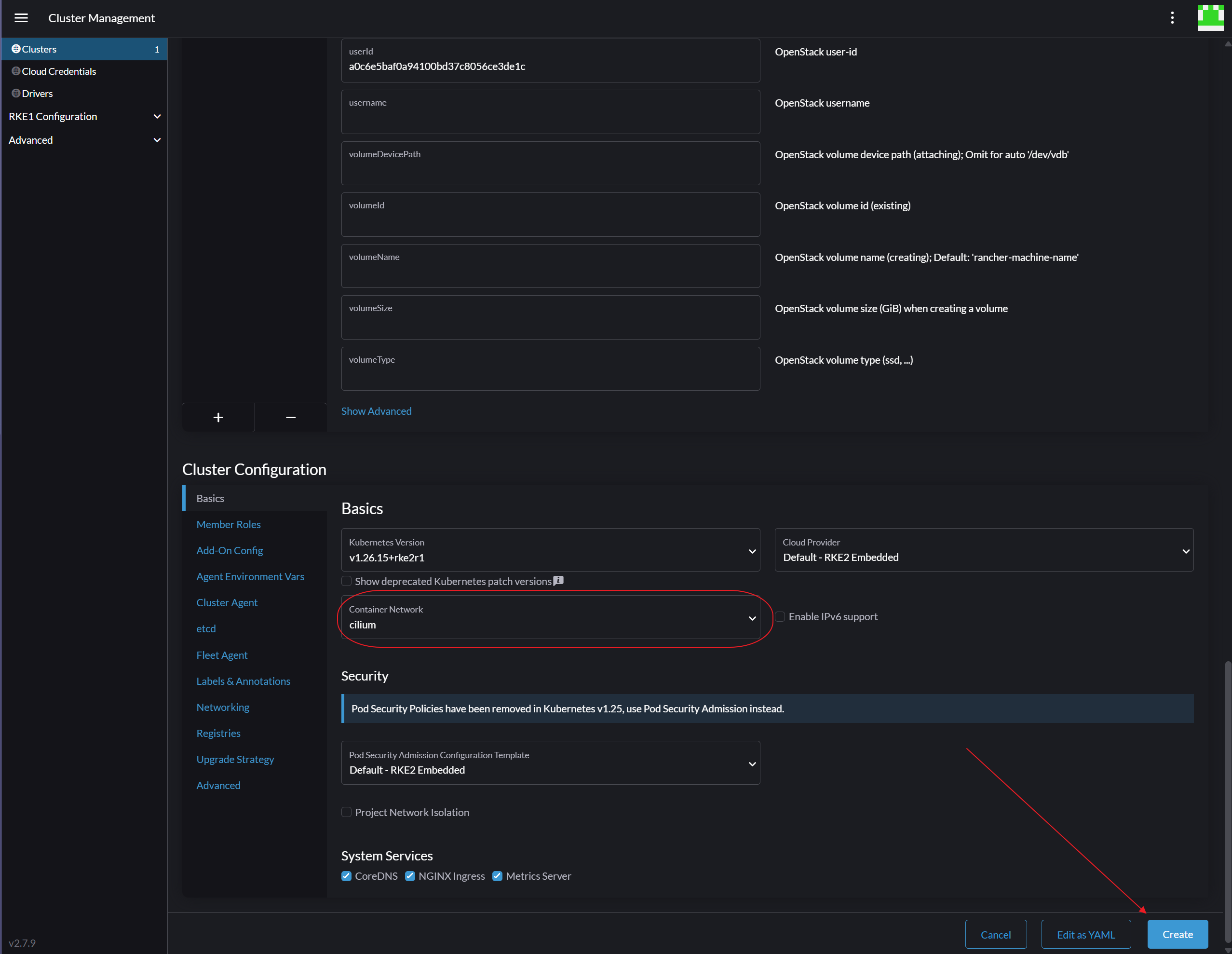
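Several of the fields above ask for IDs rather than names (network, tenant, user). One way to look them up is with the OpenStack command-line client. The sketch below assumes the `openstack` client is installed on your workstation and that credentials for the CubeCOS cloud are already loaded in the environment; neither is covered by this guide. The function names `auth_url` and `lookup_id` are hypothetical.

```shell
#!/bin/sh
# Sketch: gather values for the Rancher cluster form.
# Assumes the `openstack` client is installed and authenticated.

# Build the authentication URL from the cluster VIP, in the form given above.
auth_url() {
    echo "http://$1:5000/v3"
}

# Print the ID of a resource: lookup_id <project|user|network> <name>
lookup_id() {
    openstack "$1" show "$2" -f value -c id
}

# Usage (names taken from this guide):
#   auth_url <CUBECOS-VIP>
#   lookup_id project bigstack-k8s
#   lookup_id user    bigstack-k8s
#   lookup_id network private-k8s
```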

- Change Container Network to cilium, then click Create.

-

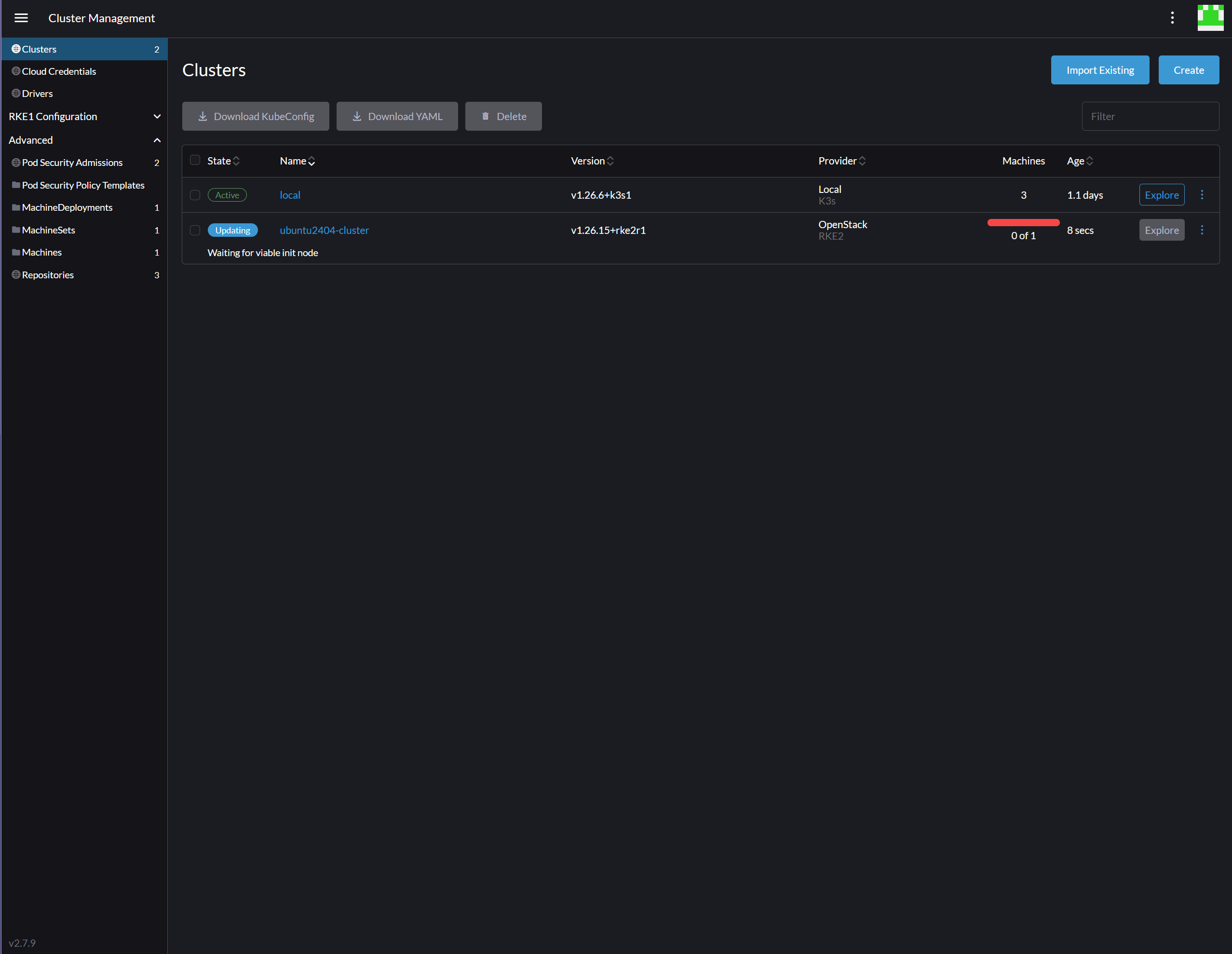

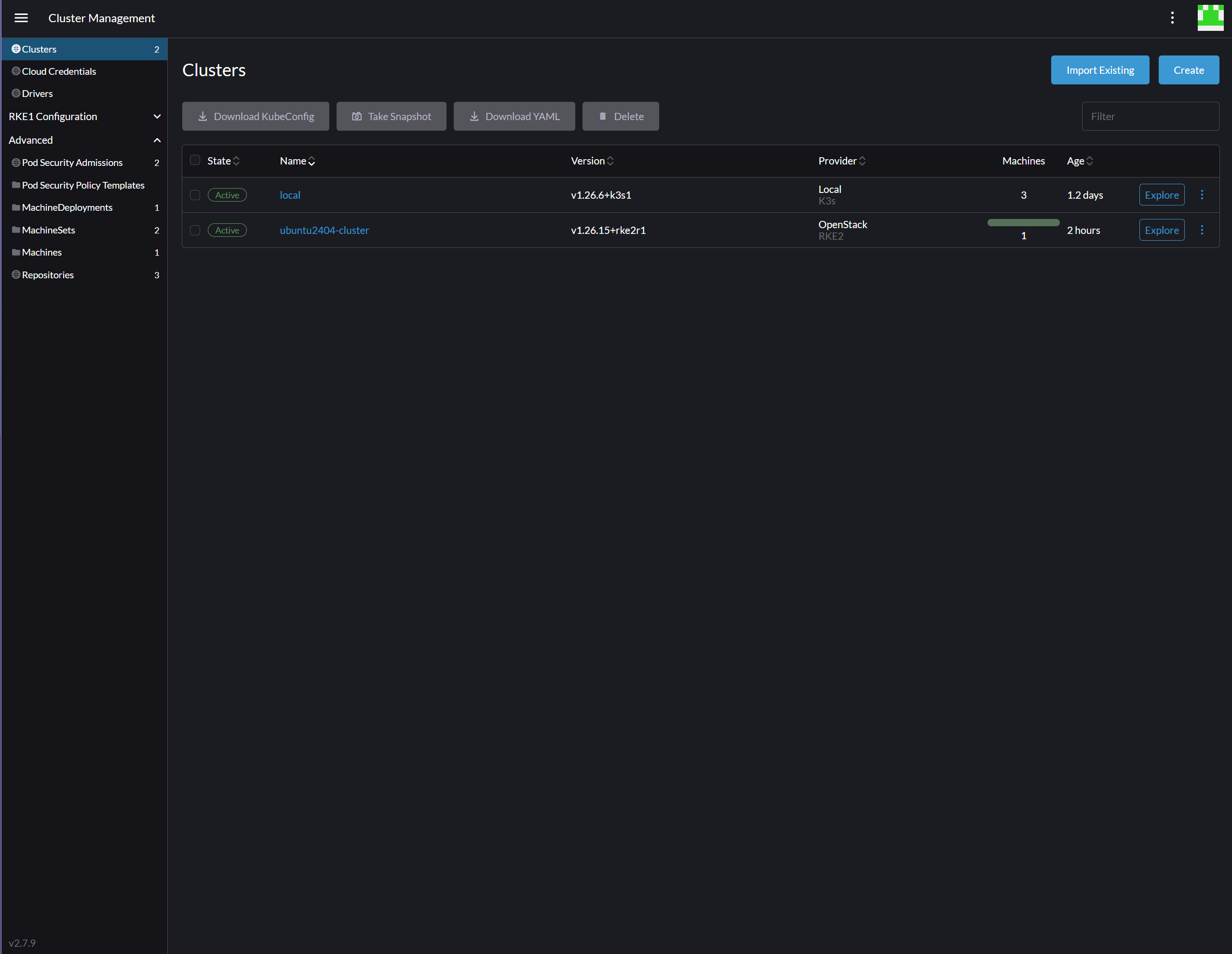

Wait till Cluster State become

Active
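If you prefer to wait from a terminal instead of watching the Rancher UI, a generic polling loop can be used. The sketch below is not a Rancher feature: `wait_until` and `all_nodes_ready` are hypothetical helpers, and the node check assumes `kubectl` is already configured with the new cluster's kubeconfig. Node readiness is only a proxy for the Active state shown in Rancher, not the same signal.

```shell
#!/bin/sh
# Generic polling helper: wait_until <timeout_s> <interval_s> <command...>
# Runs the command repeatedly until it succeeds or the timeout elapses.
wait_until() {
    timeout=$1
    interval=$2
    shift 2
    elapsed=0
    until "$@"; do
        [ "$elapsed" -ge "$timeout" ] && return 1
        sleep "$interval"
        elapsed=$((elapsed + interval))
    done
    return 0
}

# Hypothetical check: succeeds only when at least one node exists and
# every node's STATUS column is exactly "Ready".
# Assumes kubectl points at the newly created cluster.
all_nodes_ready() {
    nodes=$(kubectl get nodes --no-headers 2>/dev/null) || return 1
    [ -n "$nodes" ] || return 1
    ! printf '%s\n' "$nodes" | awk '{print $2}' | grep -qv '^Ready$'
}

# Usage: poll every 15 seconds for up to 20 minutes.
#   wait_until 1200 15 all_nodes_ready && echo "cluster nodes are Ready"
```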